Citizens' preferences and the 15-minute city

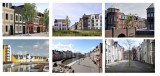

For decades, the behaviour of urban planners and politicians, but also of residents, has been determined by images of the ideal living environment, especially for those who can afford it. The single-family home, a private garden and the car in front of the door were more prominent parts of those images than living in an inclusive and complete neighbourhood. Nevertheless, such a neighbourhood, including a 'house from the 30s', is still sought after. Attempts to revive the idea of these 'traditional' neighbourhoods have been made in several places in the Netherlands by architects inspired by the principles of 'new urbanism' (see photo collage above). In these neighbourhoods, adding a variety of functions was and is one of the starting points. But whether residents of such a neighbourhood will indeed behave more 'locally' and leave their cars at home more often does not depend on a planning concept, but on long-term behavioural change.

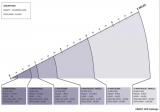

An important question is what changes in the living environment residents themselves prefer. Principles for the (re)design of space that are in line with these preferences have the greatest chance of being put into practice. It would be good to take stock of these preferences and to confront (future) residents with conflicting ideas and preconditions, for instance with regard to the necessary density. Below are a number of options, in line with commonly expressed preferences.

1. Playing space for children

Parents in particular want more playing space for their children: directly near the house for the youngest, and larger playgrounds for older children. This desire is within easy reach in new neighbourhoods, but harder to fulfil in older ones that are already full of cars. Some parents have long been happy with the possibility of occasionally turning a street into a play street. A careful inventory often reveals a surprising number of unused spaces. Furthermore, some widening of the pavements is almost always necessary, even if it costs parking space.

2. Safety

High on the agenda of many parents are pedestrian and cycle paths that cross car routes at a different level, via a bridge or an underpass. Such connections substantially widen the area children can reach on their own. In existing neighbourhoods, this too remains wishful thinking. What can be done here is to reduce the speed of traffic, ban through traffic and make cars 'guests' in the remaining streets.

3. Green

A green-blue infrastructure that penetrates deep into the immediate surroundings is not only desired by almost everyone, but also has many health benefits. The presence of (safe) water buffering (wadis and overflow ponds) extends children's play opportunities, but does take up space. In old housing estates, not much more is possible in this area than façade gardens on (widened) pavements and vegetation against walls.

4. Limiting space for cars

Even in older neighbourhoods, opportunities to play safely and to create more green space are increased by closing (parts of) streets to cars. A pain point for some residents. One option for this is to make the middle part of a street car-free and design it as an attractive green residential area with play opportunities for children of different age groups. In new housing estates, much more is possible and it hurts to see how conventionally and car-centred these are often still laid out. (Paid) parking at the edge of the neighbourhood helps create a level playing field for car and public transport use.

5. Public space and (shopping) facilities

Sometimes it is possible to turn an intersection, where for instance a café or one or more shops are already located, into a cosy little square. Neighbourhood shops tend to struggle. Many people are used to taking the car to a supermarket once a week to stock up on daily necessities for the whole week. However, some neighbourhoods are big enough for a supermarket. In some cities, where car ownership is no longer taken for granted, a viable range of shops can develop in such a square and along adjacent streets. Greater density also contributes to this.

6. Mix of people and functions

A diverse range of housing types and forms is appreciated. Mixing residential and commercial properties can also contribute to the liveliness of a neighbourhood. For new housing estates, this is increasingly becoming a starting point. For business properties, accessibility remains an important precondition.

7. Public transport

The desirability of good public transport is widely supported, but in practice many people still often choose the car, even if there are good connections. Good public transport benefits from the ease and speed with which other parts of the city can be reached. This usually requires more than one line. Dedicated bus and tram lanes are an absolute prerequisite. In the (distant) future, autonomous shuttles could significantly lower the threshold for using public transport. A company car plus free petrol is the worst way to encourage sensible car use.

8. Centres in plural

The presence of a city centre is less important for a medium-sized city, say the size of a 15-minute cycle zone, than the presence of a few smaller centres, each with its own charm, close to where people live. These can be neighbourhood (shopping) centres, where you are sure to meet acquaintances. Some of these will also attract residents from other neighbourhoods, who walk or cycle to enjoy the wider range of amenities. The presence of attractive alternatives to the 'traditional' city centre will greatly reduce the need to travel long distances.

The above measures are not a roadmap for the development of a 15-minute city; rather, they are conditions for the growth of a liveable city in general. In practice, the characteristics of such a city certainly correspond to what proponents envisage with a 15-minute city. The man behind the transformation of Paris into a 15-minute city, Carlos Moreno, has formulated a series of pointers based on all the practical examples to date, which can help citizens and administrators realise the merits of the 15-minute city in their own environments. This book will be available from mid-June 2024 and can be reserved.

For now, this is the last of the hundreds of posts on education, organisation and environment I have published over the past decade. If I report again, it will be in response to special events, circumstances and developments, which I will certainly continue to follow. Meanwhile, I have started a new series of posts on music, an old love of mine. Check out the 'Expedition music' website at hermanvandenbosch.online. English versions of the posts on this website will be available at hermanvandenbosch.com.